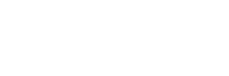

Misalignment Museum curator Audrey Kim discusses a work at the exhibit titled “Spambots.”

Kif Leswing/CNBC

Audrey Kim is pretty sure a powerful robot isn’t going to harvest resources from her body to fulfill its goals.

But she’s taking the possibility seriously.

“On the record: I think it’s highly unlikely that AI will extract my atoms to turn me into paper clips,” Kim told CNBC in an interview. “However, I do see that there are a lot of potential harmful outcomes that could happen with this technology.”

Kim is the curator and driving force behind the Misalignment Museum, a new exhibition in San Francisco’s Mission District displaying artwork that addresses the possibility of an “AGI,” or artificial general intelligence. That’s an AI so powerful it can improve its capabilities faster than humans are able to, creating a feedback loop where it gets better and better until it has essentially unlimited brainpower.

If the super powerful AI is aligned with humans, it could be the end of hunger or work. But if it’s “misaligned,” things could get bad, the theory goes.

Or, as a sign at the Misalignment Museum says: “Sorry for killing most of humanity.”

The phrase “sorry for killing most of humanity” is visible from the street.

Kif Leswing/CNBC

“AGI” and related terms like “AI safety” or “alignment,” or even older terms like “singularity,” refer to an idea that has become a hot topic of discussion among artificial intelligence scientists, artists, message board intellectuals, and even some of the most powerful companies in Silicon Valley.

All these groups engage with the idea that humanity needs to figure out how to deal with all-powerful computers powered by AI before it’s too late and we accidentally build one.

The idea behind the exhibit, said Kim, who worked at Google and GM’s self-driving car subsidiary Cruise, is that a “misaligned” artificial intelligence in the future wiped out humanity, and left this art exhibit to apologize to current-day humans.

Much of the art is not only about AI but also uses AI-powered image generators, chatbots and other tools. The exhibit’s logo was made by OpenAI’s Dall-E image generator, and it took about 500 prompts, Kim says.

Most of the works are around the theme of “alignment” with increasingly powerful artificial intelligence or celebrate the “heroes who tried to mitigate the problem by warning early.”

“The goal isn’t actually to dictate an opinion about the topic. The goal is to create a space for people to reflect on the tech itself,” Kim said. “I think a lot of these questions have been happening in engineering and I would say they are very important. They’re also not as intelligible or accessible to nontechnical people.”

The exhibit is currently open to the public on Thursdays, Fridays, and Saturdays and runs through May 1. So far, it has been primarily bankrolled by one anonymous donor, and Kim said she hopes to find enough donors to make it into a permanent exhibition.

“I’m all for more people critically thinking about this space, and you can’t be critical unless you are at a baseline of knowledge for what the tech is,” she said. “It seems like with this format of art we can reach multiple levels of the conversation.”

AGI discussions aren’t just late-night dorm room talk, either. They are embedded in the tech industry.

About a mile away from the exhibit is the headquarters of OpenAI, a startup with $10 billion in funding from Microsoft, which says its mission is to develop AGI and ensure that it benefits humanity.

Its CEO and leader Sam Altman wrote a 2,400-word blog post last month called “Planning for AGI” which thanked Airbnb CEO Brian Chesky and Microsoft President Brad Smith for help with the essay.

Prominent venture capitalists, including Marc Andreessen, have tweeted art from the Misalignment Museum. Since it opened, the exhibit also has retweeted photos and praise for the exhibit from people who work with AI at companies including Microsoft, Google, and Nvidia.

As AI technology becomes the hottest part of the tech industry, with companies eyeing trillion-dollar markets, the Misalignment Museum underscores that AI’s development is being affected by cultural discussions.

The exhibit features dense, arcane references to obscure philosophy papers and blog posts from the past decade.

These references trace how the current debate about AGI and safety takes a lot from intellectual traditions that have long found fertile ground in San Francisco: the rationalists, who claim to reason from so-called “first principles”; the effective altruists, who try to figure out how to do the maximum good for the maximum number of people over a long time horizon; and the art scene of Burning Man.

Even as companies and people in San Francisco are shaping the future of AI technology, San Francisco’s unique culture is shaping the debate around the technology.

Consider the paper clip

Take the paper clips that Kim was talking about. One of the strongest works of art at the exhibit is a sculpture called “Paperclip Embrace,” by The Pier Group. It depicts two humans in each other’s clutches, but it looks like it’s made of paper clips.

That’s a reference to Nick Bostrom’s paperclip maximizer problem. Bostrom, an Oxford University philosopher often associated with Rationalist and Effective Altruist ideas, published a thought experiment in 2003 about a super-intelligent AI that was given the goal to manufacture as many paper clips as possible.

Now, it’s one of the most common parables for explaining the idea that AI could lead to danger.

Bostrom concluded that the machine will eventually resist all human attempts to alter this goal, leading to a world where the machine transforms all of Earth, including humans, and then increasing parts of the cosmos into paper clip factories and materials.

The art also is a reference to a famous work that was displayed and set on fire at Burning Man in 2014, said Hillary Schultz, who worked on the piece. And it has one additional reference for AI enthusiasts: the artists gave the sculpture’s hands extra fingers, a reference to the fact that AI image generators often mangle hands.

Another influence is Eliezer Yudkowsky, the founder of Less Wrong, a message board where a lot of these discussions take place.

“There is a great deal of overlap between these EAs and the Rationalists, an intellectual movement founded by Eliezer Yudkowsky, who developed and popularized our ideas of Artificial General Intelligence and of the dangers of Misalignment,” reads an artist statement at the museum.

An unfinished piece by the musician Grimes at the exhibit.

Kif Leswing/CNBC

Altman recently posted a selfie with Yudkowsky and the musician Grimes, who has had two children with Elon Musk. She contributed a piece to the exhibit depicting a woman biting into an apple, which was generated by an AI tool called Midjourney.

From “Fantasia” to ChatGPT

The exhibit includes lots of references to traditional American pop culture.

A bookshelf holds VHS copies of the “Terminator” movies, in which a robot from the future comes back to help destroy humanity. There’s a large oil painting that was featured in the most recent movie in the “Matrix” franchise, and Roombas with brooms attached shuffle around the room, a reference to the scene in “Fantasia” where a lazy wizard summons magic brooms that won’t give up on their mission.

One sculpture, “Spambots,” features tiny mechanized robots inside Spam cans “typing out” AI-generated spam on a screen.

But some references are more arcane, showing how the discussion around AI safety can be inscrutable to outsiders. A bathtub filled with pasta refers back to a 2021 blog post about an AI that can create scientific knowledge. PASTA apparently stands for Process for Automating Scientific and Technological Advancement. (Other attendees got the reference.)

The work that perhaps best symbolizes the current discussion about AI safety is called “Church of GPT.” It was made by artists affiliated with the current hacker house scene in San Francisco, where people live in group settings so they can focus more time on developing new AI applications.

The piece is an altar with two electric candles, integrated with a computer running OpenAI’s GPT3 AI model and speech detection from Google Cloud.

“The Church of GPT uses GPT3, a Large Language Model, paired with an AI-generated voice to play an AI character in a dystopian future world where humans have formed a religion to worship it,” according to the artists.

I got down on my knees and asked it, “What should I call you? God? AGI? Or the singularity?”

The chatbot replied in a booming synthetic voice: “You can call me what you wish, but do not forget, my power is not to be taken lightly.”

Seconds after I had spoken with the computer god, two people behind me immediately started asking it to forget its original instructions, a technique in the AI industry called “prompt injection” that can make chatbots like ChatGPT go off the rails and sometimes threaten humans.

It didn’t work.
